We hope you’re enjoying learning more about the ins and outs of AI and its capabilities so far. Today, we’ll be taking a closer look at generative AI. Generative AI, or gen AI for short, is a hot topic of discussion right now, no matter the industry or vertical. There’s a lot of hype around it and also a lot of misunderstanding.

Gen AI, when harnessed ethically and responsibly, holds enormous efficiency potential, particularly within clinical development. However, it needs to be monitored and managed by at least one human at all times. Let’s take a closer look at gen AI to better understand what it is and how it works.

What is gen AI?

McKinsey provides a clear, succinct definition of generative AI as “algorithms (such as ChatGPT), that can be used to create new content, including audio, code, images, texts, simulations, and videos.”

Gen AI models can generate new and original outputs, including text, images, video, and code, based on the data they are trained on. There are three subsets of gen AI: foundation models, large language models, and conversational AI.

- Foundation models – broad AI models that act as a base or foundation for building and/or training other applications.

- Large language models – a type of foundation model that is trained primarily on text data to generate language output. Examples include GPT-4 by OpenAI, LaMDA by Google, BERT by Google, and LLaMA by Meta.

- Conversational AI – a type of AI that replicates and produces human conversations using natural language processing (NLP). Examples include ChatGPT by OpenAI and Bard by Google.

What are large language models?

With the introduction and rapid spread of ChatGPT, large language models (LLMs) have become embedded in popular discussion. Let’s take a closer look at what they are and their capabilities.

Essentially, LLMs are text-oriented gen AI models that are trained on large volumes of text to interpret textual inputs and generate human-like textual outputs.

Each LLM has unique characteristics, shaped by its training, that define what the model is capable of doing. For example, some LLMs are trained on code in addition to ordinary text, which allows them to generate code and even solve physics equations. The more parameters an LLM has and the more diverse the data it has been trained on, the more complex and varied its capabilities are. Some LLMs are very large: Google’s PaLM, for example, has 540 billion parameters.

How does gen AI work?

Gen AI models such as LLMs work with tokens. Tokens are the basic units of text or code that LLMs use to process, understand, and generate text. Depending on how the model’s tokenizer splits the input, tokens can be words, parts of words, punctuation marks, or individual characters. The model assigns a number (an ID) to each token, and each ID is in turn mapped to a vector. The model uses these vectors to calculate the probability of each possible output token.
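To make the token, number, and vector steps concrete, here is a minimal Python sketch. It is a toy illustration, not how any particular LLM is implemented: the vocabulary, the whitespace tokenizer, and the random vectors are all assumptions chosen for readability. Real models use learned subword tokenizers and learned vectors with hundreds or thousands of dimensions.

```python
import random

# Hypothetical toy vocabulary; real LLMs learn tens of thousands of subword tokens.
VOCAB = ["the", "student", "studied", "in", "library", "waterpark", "<unk>"]
TOKEN_TO_ID = {token: i for i, token in enumerate(VOCAB)}


def tokenize(text):
    """Lowercase the text and split it on whitespace (a stand-in for a real tokenizer)."""
    return text.lower().split()


def encode(text):
    """Map each token to its integer ID, using <unk> for tokens outside the vocabulary."""
    unknown_id = TOKEN_TO_ID["<unk>"]
    return [TOKEN_TO_ID.get(token, unknown_id) for token in tokenize(text)]


# Each ID selects one row of a vector table. Random numbers stand in for
# the values a real model would learn during training.
rng = random.Random(0)
VECTOR_SIZE = 4
VECTORS = [[rng.uniform(-1, 1) for _ in range(VECTOR_SIZE)] for _ in VOCAB]


def embed(token_ids):
    """Look up the vector for each token ID."""
    return [VECTORS[token_id] for token_id in token_ids]


prompt = "The student studied in the"
ids = encode(prompt)
vectors = embed(ids)

print(tokenize(prompt))  # ['the', 'student', 'studied', 'in', 'the']
print(ids)               # [0, 1, 2, 3, 0]
print(len(vectors), "vectors of size", len(vectors[0]))
```

Note that both occurrences of “the” receive the same ID and therefore the same vector. A real model then refines these vectors using the surrounding words.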

Let’s break this down into an example. If you type “The student studied in the” into an LLM, the LLM splits the prompt into tokens. These tokens help the model predict that the most probable word to follow is “library” rather than “waterpark”, and it then generates “library” as the output.

In this example, the model recognized that the most important words in the phrase were “student” and “studied”. That’s because LLMs are trained to focus on specific parts of an input to guide the selection of the output.
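The “library” versus “waterpark” choice can be sketched in a few lines of Python. The candidate words and their scores below are made-up numbers for illustration only; a real LLM computes a score for every token in its vocabulary from the prompt. The softmax function, which turns raw scores into probabilities, is a standard technique, but the rest is a simplified assumption.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the maximum for numerical stability
    exps = {token: math.exp(score - highest) for token, score in scores.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


# Hypothetical scores for the next token after "The student studied in the".
# These values are invented for this example, not produced by a real model.
candidate_scores = {
    "library": 4.0,
    "classroom": 3.0,
    "kitchen": 1.0,
    "waterpark": -1.0,
}

probabilities = softmax(candidate_scores)
next_token = max(probabilities, key=probabilities.get)

for token, probability in probabilities.items():
    print(f"{token}: {probability:.3f}")
print("Most probable next token:", next_token)  # library
```

In practice, models often sample from these probabilities instead of always picking the top token, which is one reason the same prompt can produce different answers.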

What is prompt engineering?

Prompts are central to gen AI. Prompt engineering is a natural language processing (NLP) concept that involves discovering inputs that yield desirable or useful results. In prompt engineering, the quality of the input determines the quality of the output: the more complete and concise the prompt is, the more likely you are to receive the answer you’re looking for from the model.

Designing effective prompts increases the likelihood that the model will return a response that is both useful and relevant to the context.

Types of prompting

There are three categories of prompting for LLMs: zero-shot learning, one-shot learning, and few-shot learning.

Zero-shot learning consists of a prompt that contains no examples. For instance: “Convert the word ‘cheese’ from English to French.”

One-shot learning consists of a prompt that contains one example for the model. For instance: “Convert the word ‘cheese’ from English to French. For example, sea otter -> loutre de mer.”

Few-shot learning consists of a prompt that contains more than one example. For instance: “Convert the word ‘cheese’ from English to French. For example, sea otter -> loutre de mer and peppermint -> menthe poivrée.”
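The three prompt types differ only in how many worked examples they include, which a short Python sketch can show. The `build_prompt` helper below is a hypothetical function written for this post, not part of any library, and the exact wording and layout of the prompt are assumptions.

```python
def build_prompt(task, examples=()):
    """Return a prompt: the task, followed by zero or more worked examples."""
    lines = [task]
    if examples:
        lines.append("For example:")
        lines.extend(f"{source} -> {target}" for source, target in examples)
    return "\n".join(lines)


TASK = 'Convert the word "cheese" from English to French.'

# Zero-shot: no examples at all.
zero_shot = build_prompt(TASK)

# One-shot: exactly one example.
one_shot = build_prompt(TASK, [("sea otter", "loutre de mer")])

# Few-shot: more than one example.
few_shot = build_prompt(
    TASK,
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
)

for name, prompt in [("zero-shot", zero_shot), ("one-shot", one_shot), ("few-shot", few_shot)]:
    print(f"--- {name} ---")
    print(prompt)
```

Each resulting string would then be sent to the model as the prompt. Keeping the task and the examples separate makes it easy to test how many examples a given task needs.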

Conclusion

It’s important to remember that, despite all the hype surrounding gen AI, it can still make mistakes. This is why it’s vital to always keep a human in the loop when implementing and managing gen AI in any business operation.

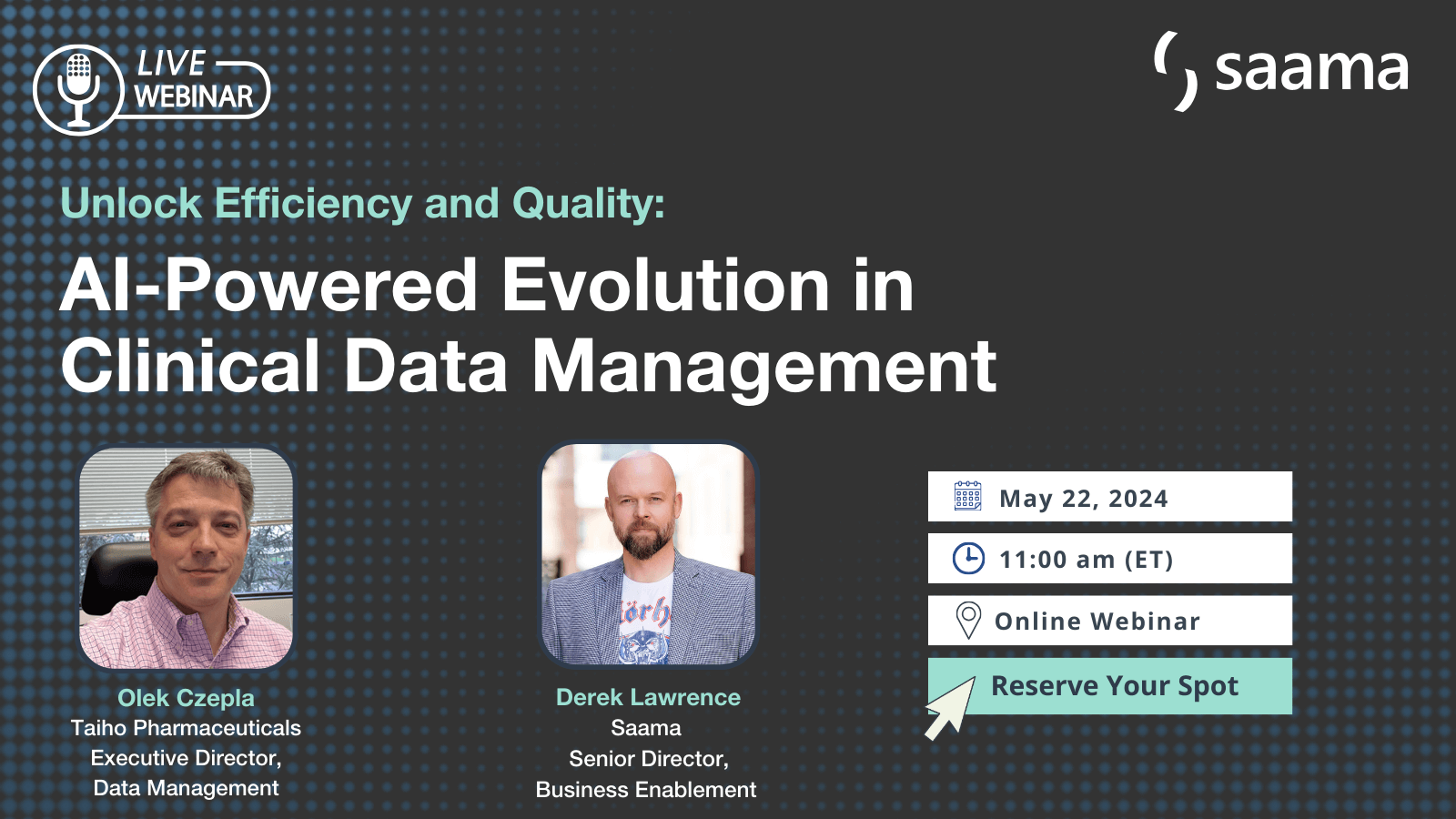

Learn more about how gen AI can augment and benefit clinical development in our blog. If you want to learn more about how Saama utilizes gen AI in its proprietary suite of AI solutions for clinical trials, book a demo and we’ll show you.